Understanding Public Opinion Surveys

The following is a longer version of an article that originally appeared in October 2006.

Election season is upon us, and there’s no shortage of polls telling us what we think. But judging the accuracy of all these numbers requires a basic understanding of the science of polling.

The first known opinion poll was a presidential "straw vote" conducted by the Harrisburg Pennsylvanian in 1824. The newspaper simply surveyed 500 or so residents of Wilmington, Del., without regard to demographics. The results showed Andrew Jackson leading John Quincy Adams by 66 percent to 33 percent.[1] (William Crawford, secretary of the treasury, and Henry Clay, speaker of the U.S. House of Representatives, also were candidates.)

The poll accurately predicted the outcome of the popular vote, but none of the candidates actually won a majority of the electoral vote. Consequently, the election was decided by the House of Representatives, which chose Adams.[2]

Election polling was popularized in the 20th century by the Literary Digest, a weekly magazine published by Funk and Wagnalls. Beginning with the re-election of Woodrow Wilson in 1916, the Digest correctly predicted the result of every presidential campaign until 1936. Its polling consisted of mailing millions of postcard "ballots" nationwide (along with a subscription form) and tallying the responses. Many considered the Digest poll to be unassailable.

So it seemed until 1936, when the Digest forecast a landslide win by Alfred Landon over Franklin Roosevelt.[3] In contrast, a young George Gallup predicted a Roosevelt victory based on a "representative sample" of 50,000 people — that is, a group that resembled the population at large. Yet his prediction was widely ridiculed as naive.[4]

In hindsight, the Digest’s error is easy to spot: Its mailing list consisted of households with telephones, cars and magazine subscriptions. In the midst of the Great Depression, those with money to spare for such relative luxuries hardly represented the voters who would favor Roosevelt and his New Deal.

The Literary Digest folded soon after Roosevelt’s election. What was true then is true today: A flawed sample cannot be corrected simply by increasing the number of respondents.
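The point about flawed samples can be illustrated with a small simulation. This is only a sketch under invented assumptions: the electorate shares and support levels below are hypothetical numbers chosen for illustration, not historical data from 1936. It compares a very large poll drawn only from affluent households with a much smaller poll drawn at random from everyone.

```python
import random

# Hypothetical electorate (illustrative numbers, not historical data).
AFFLUENT_SHARE = 0.30          # share of voters reachable through the mailing list
SUPPORT_AMONG_AFFLUENT = 0.40  # support for candidate A among affluent voters
SUPPORT_AMONG_OTHERS = 0.70    # support for candidate A among everyone else
TRUE_SUPPORT = (AFFLUENT_SHARE * SUPPORT_AMONG_AFFLUENT
                + (1 - AFFLUENT_SHARE) * SUPPORT_AMONG_OTHERS)


def biased_poll(n_respondents, rng):
    """Survey only affluent households and return candidate A's share."""
    hits = sum(rng.random() < SUPPORT_AMONG_AFFLUENT for _ in range(n_respondents))
    return hits / n_respondents


def random_poll(n_respondents, rng):
    """Survey voters drawn at random from the whole electorate."""
    hits = 0
    for _ in range(n_respondents):
        is_affluent = rng.random() < AFFLUENT_SHARE
        support = SUPPORT_AMONG_AFFLUENT if is_affluent else SUPPORT_AMONG_OTHERS
        hits += rng.random() < support
    return hits / n_respondents


rng = random.Random(1936)  # fixed seed so the run is repeatable
big_biased = biased_poll(100_000, rng)
small_random = random_poll(1_000, rng)

print(f"True support:             {TRUE_SUPPORT:.3f}")
print(f"Biased poll, n = 100,000: {big_biased:.3f}")
print(f"Random poll, n = 1,000:   {small_random:.3f}")
```

Under these assumptions, the biased poll settles very precisely on the wrong answer, while the far smaller random poll lands near the true figure.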

The Gallup organization suffered its own embarrassment in 1948, when it predicted a victory by Thomas Dewey over Harry Truman. Gallup claimed the error resulted from ending his polling three weeks before Election Day. Others faulted his use of "quota sampling," by which respondents were targeted based on gender, age, race and income. This type of sampling can be useful when comparing differences of opinion between specific demographic groups; it is not accurate, however, for estimating how many people within an entire population hold a particular viewpoint.

The use of quota sampling for elections was largely replaced after 1948 by "random sampling," in which all segments of the population have a reasonably equal chance of being surveyed.

Sample Methods

Whereas the Literary Digest used postcards and Gallup posed questions face-to-face, most polls today are conducted by telephone. Calls are typically made by "random digit dialing," the process by which computers generate wireline telephone numbers. This method improves the chances of reaching households with unlisted numbers or new service. But the substitution of cell phones for traditional landlines among some segments of the population has lately raised questions about whether random digit dialing can still produce an unbiased sample.

The term "sampling error" refers to instances in which the results from a particular sample differ from the views of the relevant population just by chance. For example, a majority of respondents in a particular sample might have supported Alfred Landon even if a majority of the overall population supported Franklin Roosevelt.
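Sampling error can be seen by drawing many random samples from the same population and watching the results vary. The sketch below assumes a hypothetical population in which 55 percent support a candidate, and a hypothetical sample size of 100. The function name and all numbers are illustrative.

```python
import random


def simulate_sample_shares(true_share, sample_size, n_samples, seed=0):
    """Draw repeated random samples and return the supporter share in each one."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    shares = []
    for _ in range(n_samples):
        supporters = sum(rng.random() < true_share for _ in range(sample_size))
        shares.append(supporters / sample_size)
    return shares


# Hypothetical population: 55 percent support the candidate.
shares = simulate_sample_shares(true_share=0.55, sample_size=100, n_samples=2000)
wrong_call = sum(1 for share in shares if share < 0.5)

print(f"Lowest sample result:  {min(shares):.2f}")
print(f"Highest sample result: {max(shares):.2f}")
print(f"{wrong_call} of {len(shares)} samples put the majority candidate below 50 percent")
```

Every sample here is drawn fairly, yet some still point to the wrong leader purely by chance. Larger samples shrink that spread but never eliminate it.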

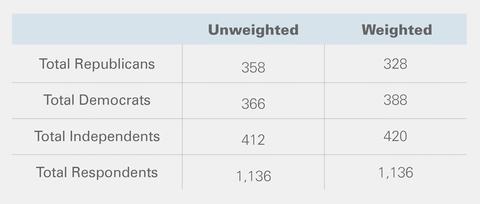

To achieve more accurate findings, pollsters adjust their results to more closely reflect key demographic characteristics of the overall population. For example, if a survey is written to measure political views, and if 40 percent of all registered voters at the time of the survey are Democrats, the results will generally be more reflective of the entire population if the sample is "weighted" so that Democrats effectively make up 40 percent of the responses to any given question.

In the following table, the "unweighted" column contains the number of respondents in the sample according to their party affiliation. The "weighted" column, in contrast, contains the number of respondents after weights are applied to more accurately reflect the actual population.

Survey results based on samples that have been heavily weighted are generally considered less reliable. Consequently, it is helpful to know how a sample has been weighted in order to judge the precision of the poll.
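The weighting described above can be sketched in a few lines. The sample counts and the Republican and independent population shares below are hypothetical. Only the 40 percent Democratic share comes from the example in the text. The effective sample size shown is Kish's common approximation, used here as one rough way to gauge how much precision weighting costs. It is not necessarily the method any particular pollster uses.

```python
def party_weights(sample_counts, population_shares):
    """Weight for each party = population share / share of the raw sample."""
    total = sum(sample_counts.values())
    return {party: population_shares[party] / (count / total)
            for party, count in sample_counts.items()}


def effective_sample_size(sample_counts, weights):
    """Kish's approximation: (sum of weights)^2 / (sum of squared weights)."""
    sum_w = sum(sample_counts[p] * weights[p] for p in sample_counts)
    sum_w2 = sum(sample_counts[p] * weights[p] ** 2 for p in sample_counts)
    return sum_w ** 2 / sum_w2


# Hypothetical unweighted sample of 1,000 respondents.
sample_counts = {"Democrat": 300, "Republican": 400, "Independent": 300}
# Assumed population shares; only the 40 percent figure is from the article.
population_shares = {"Democrat": 0.40, "Republican": 0.35, "Independent": 0.25}

weights = party_weights(sample_counts, population_shares)
weighted_counts = {p: sample_counts[p] * weights[p] for p in sample_counts}
n_effective = effective_sample_size(sample_counts, weights)

for party in sample_counts:
    print(f"{party:<12} unweighted={sample_counts[party]:>4} "
          f"weight={weights[party]:.3f} weighted={weighted_counts[party]:.0f}")
print(f"Effective sample size: {n_effective:.0f} of {sum(sample_counts.values())}")
```

The further the weights stray from 1, the smaller the effective sample size becomes, which is one way to see why heavily weighted results are treated as less precise.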

Most polls are reported with their "margin of error." This is an estimate of the extent to which the survey results would vary if the same poll were repeated multiple times. If a pollster reports that 75 percent of voters are dissatisfied with Congress, a ±3 percent margin of error usually means that there is a 95 percent chance that the true measure of voter dissatisfaction falls between 72 percent and 78 percent.

The margin of error accounts only for a potential error in the random sampling, not for survey bias or miscalculations. The margin of error is typically formulated based on one of three "levels of confidence": 99 percent, 95 percent or 90 percent. A 99 percent level of confidence (the most conservative) indicates that the survey results would be "true" within the margin of error 99 percent of the time.
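For readers who want to see where such figures come from, the sketch below uses the standard textbook formula for a simple random sample, in which the margin of error equals a confidence multiplier times the square root of p(1 - p)/n. The sample size of 800 is an assumption chosen for illustration. Real polls often adjust this figure for weighting and survey design.

```python
from math import sqrt
from statistics import NormalDist


def margin_of_error(share, sample_size, confidence=0.95):
    """Textbook margin of error for a proportion from a simple random sample."""
    # Two-sided multiplier, e.g. about 1.96 for a 95 percent confidence level.
    z = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    return z * sqrt(share * (1 - share) / sample_size)


# Assumed poll: 75 percent dissatisfied, 800 respondents (hypothetical size).
for level in (0.90, 0.95, 0.99):
    moe = margin_of_error(0.75, 800, confidence=level)
    print(f"{level:.0%} confidence: ±{moe * 100:.1f} percentage points")
```

With these assumptions, the 95 percent level gives roughly the ±3 points in the example above. A 99 percent level widens the margin, and a 90 percent level narrows it.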

In comparing the results of two or more surveys, it is important to recognize that the margin of error that would apply to both sets of results taken together would be greater than the margin of error for either of the surveys alone.
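One common rule of thumb for comparing two polls is sketched below. It assumes that the two surveys drew independent samples and reported their margins at the same level of confidence. In that case the margin for the difference between them is the square root of the sum of the squared margins. The function names and poll figures are hypothetical.

```python
import math


def margin_of_difference(moe_a, moe_b):
    """Margin of error for the gap between two independent poll results."""
    return math.hypot(moe_a, moe_b)  # sqrt(moe_a**2 + moe_b**2)


def change_exceeds_margin(result_a, result_b, moe_a, moe_b):
    """True if the gap between two results is larger than the combined margin."""
    return abs(result_a - result_b) > margin_of_difference(moe_a, moe_b)


# Two hypothetical polls, each with a margin of ±3 percentage points.
combined = margin_of_difference(3.0, 3.0)
print(f"Combined margin: ±{combined:.2f} points")
print("48 vs. 52 exceeds margin:", change_exceeds_margin(48, 52, 3.0, 3.0))
print("45 vs. 52 exceeds margin:", change_exceeds_margin(45, 52, 3.0, 3.0))
```

Under these assumptions, a four-point shift between two polls with ±3 point margins falls inside the combined margin and could be due to chance alone.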

Survey Wording

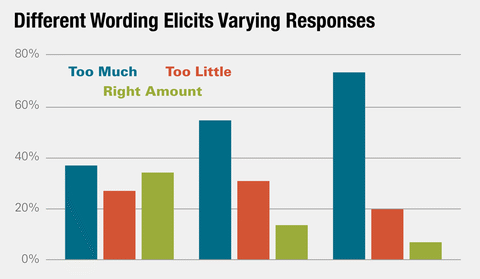

The wording of questions is crucial to interpreting poll results. Individual responses often depend on the way in which survey questions are posed. The three questions below, developed by researchers at Michigan State University, demonstrate how wording can affect poll results.[5]

- Does Michigan spend too much, too little or the right amount on corrections?
- Is $1.3 billion spent on corrections annually too much, too little, or the right amount?
- Is spending $23,700 per prisoner annually too much, too little or the right amount?

From a budgetary standpoint, expenditures of $23,700 per prisoner and $1.3 billion in total are equivalent. Yet public opinion about the appropriateness of each figure varies remarkably, as does the result when no figure is cited.

Similarly, it is helpful to know the full range of responses in order to understand the poll results. For example, in the table of hypothetical data below, it could be accurately reported that 45 percent of Americans have an unfavorable impression of the Federal Emergency Management Agency. In the absence of information about the range of responses, readers could easily conclude that 55 percent of Americans have a favorable opinion. An entirely different impression would be conveyed were it reported that fewer than one in five say their impression of FEMA is favorable.

| Impression of FEMA | Share of respondents |
| --- | --- |
| Very Favorable | 2% |
| Somewhat Favorable | 17% |
| Somewhat Unfavorable | 20% |
| Very Unfavorable | 25% |
| Haven’t Heard Enough | 31% |
| Don’t Know/Not Applicable | 5% |
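The arithmetic behind these competing headlines can be checked by collapsing the hypothetical responses above into broader groups. The grouping and the group labels in this sketch are illustrative choices, not part of the original survey data.

```python
# Hypothetical responses from the table above, in percent.
responses = {
    "Very Favorable": 2,
    "Somewhat Favorable": 17,
    "Somewhat Unfavorable": 20,
    "Very Unfavorable": 25,
    "Haven’t Heard Enough": 31,
    "Don’t Know/Not Applicable": 5,
}

# Illustrative grouping of the six answers into three headline categories.
groups = {
    "Favorable": ["Very Favorable", "Somewhat Favorable"],
    "Unfavorable": ["Somewhat Unfavorable", "Very Unfavorable"],
    "No opinion": ["Haven’t Heard Enough", "Don’t Know/Not Applicable"],
}

summary = {label: sum(responses[answer] for answer in answers)
           for label, answers in groups.items()}

for label, percent in summary.items():
    print(f"{label}: {percent}%")
```

The same data supports "45 percent unfavorable" and "only 19 percent favorable," while more than a third of respondents offered no opinion at all.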

Ideally, all of a survey’s wording and responses will be made available to the public when the survey results are published. When survey language is not made public, however, it is useful to know who sponsored the poll to help judge the survey’s reliability.

Response Bias

Survey results may also be affected by "response bias," in which respondents’ answers do not reflect their actual beliefs. This can occur for a variety of reasons:

- The "Bandwagon Effect," in which respondents base their answers, intentionally or otherwise, on a desire to be associated with the leading candidate or cause.
- The "Underdog Effect," in which respondents formulate their opinions out of sympathy for the candidate or the cause that appears to be trailing in support.
- The "Spiral of Silence," in which respondents feel under social pressure to give only a politically correct answer rather than their actual opinion.

Conclusion

When conducted properly and understood correctly, polls provide a useful snapshot of popular opinion. If manipulated or misinterpreted, polls can distort reality. Voters would do well to evaluate polls based on the following 10 questions.[6]

- Who paid for the poll?
- How many people were interviewed for the survey?
- How were those people chosen?
- Are the results based on the answers of all the people interviewed?
- When was the poll done?
- How were the interviews conducted?
- What is the margin of error for the poll results?
- What is the level of confidence for the poll results?
- What questions were asked?
- What other polls have been done on this topic? Do they say the same thing? If they are different, why are they different?

[1] Terry Madonna and Michael Young, "Politically Uncorrected: The First Political Poll," Franklin & Marshall College, Lancaster, Pa. Available on World Wide Web: www.fandm.edu/x3905.xml

[3] The U.S. Survey Course on the Web, "Landon in a Landslide: The Poll That Changed Polling," City University of New York and George Mason University. Available on World Wide Web: http://historymatters.gmu.edu/d/5168.

[4] Sally Sievers, "The Infamous Literary Digest Poll, and the Election of 1936," Wells College, Aurora, N.Y. Available on World Wide Web: http://aurora.wells.edu/~srs/Math151-Fall02/Litdigest.htm.

[5] Institute for Public Policy and Social Research, 1997. State of the State Survey, "Attitudes Toward Crime and Criminal Justice: What You Find Depends on What You Ask," No. 97-20, Michigan State University, East Lansing, Mich. Available on World Wide Web: http://ippsr.msu.edu/Publications/bp9720.pdf.

[6] Adapted from Sheldon R. Gawiser and G. Evans Witt, "20 Questions a Journalist Should Ask About Poll Results," National Council on Public Polls. Available on World Wide Web: www.ncpp.org/?q=node/4.

Michigan Capitol Confidential is the news source produced by the Mackinac Center for Public Policy. Michigan Capitol Confidential reports with a free-market news perspective.
